Testing microservices. Review of Building Microservices.

A few weeks ago I came across an awesome book, “Building Microservices” by Sam Newman. The author covers a lot of useful topics about microservices, such as testing, deployment, and monitoring. So I decided to write a review of the parts I found most interesting and pull out notable quotes from the book.

Background

“A key driver to ensuring we can release our software frequently is based on the idea that we release small changes as soon as they are ready.”

There are a lot of approaches to testing a monolithic application. However, distributed systems bring new impediments. Every change within one microservice can affect other microservices and eventually break the system. How can we be sure that a microservice is ready for release and that the whole system won’t break? Which tests should be written, and which test strategies should be used?

Solution

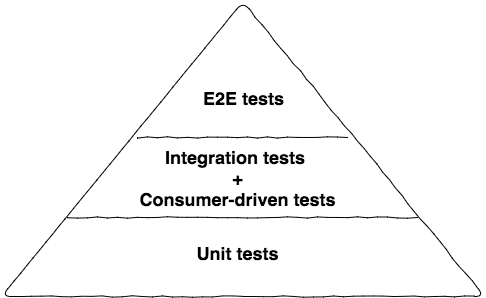

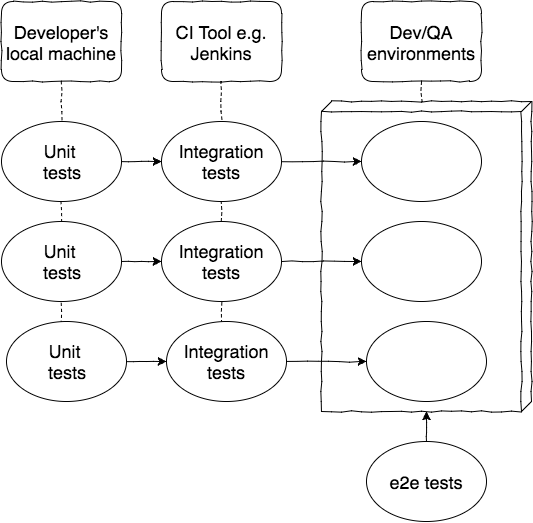

The author describes three levels of tests:

Unit tests

These are tests that typically test a single function or method call. Tests generated as a side effect of test-driven design (TDD) fall into this category. Done right, they are very, very fast, and on modern hardware you could expect to run many thousands of these in less than a minute. The prime goal of these tests is to give us very fast feedback about whether our functionality is good.
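To make this concrete, here is a minimal sketch of a unit test in Python. The `apply_discount` function is a hypothetical example of mine, not something from the book; the tests use plain `assert` statements in the style a runner such as pytest would pick up.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical business function: return price reduced by percent."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_reduces_price_by_percentage():
    # One function call, no I/O, no collaborators: this is what keeps it fast.
    assert apply_discount(200.0, 25) == 150.0


def test_rejects_invalid_percentage():
    # The error path is checked at the same small scope.
    try:
        apply_discount(200.0, 150)
    except ValueError:
        return
    raise AssertionError("expected ValueError for percent > 100")
```

Tests like these touch no network, database, or file system, which is why thousands of them can run in under a minute.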

Integration Tests (Service)

The reason we want to test a single service by itself is to improve the isolation of the test to make finding and fixing problems faster. To achieve this isolation, we need to stub out all external collaborators so only the service itself is in scope.
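A rough sketch of what stubbing a collaborator can look like is shown below. The names `CustomerService` and `StubLoyaltyClient` are hypothetical, and for brevity the stub is injected in-process; in a real service test the stub would more likely be a fake HTTP endpoint that the service calls over the network.

```python
class StubLoyaltyClient:
    """Stub for a remote loyalty-points service (hypothetical collaborator)."""

    def __init__(self, points_by_customer):
        self.points_by_customer = points_by_customer

    def get_points(self, customer_id: str) -> int:
        # Return canned data instead of calling the real service.
        return self.points_by_customer.get(customer_id, 0)


class CustomerService:
    """Hypothetical service under test; it depends on the loyalty service."""

    def __init__(self, loyalty_client):
        self.loyalty_client = loyalty_client

    def get_profile(self, customer_id: str) -> dict:
        points = self.loyalty_client.get_points(customer_id)
        tier = "gold" if points >= 1000 else "standard"
        return {"id": customer_id, "points": points, "tier": tier}


def test_profile_uses_points_from_loyalty_service():
    service = CustomerService(StubLoyaltyClient({"c-1": 1200}))
    assert service.get_profile("c-1") == {"id": "c-1", "points": 1200, "tier": "gold"}


def test_unknown_customer_gets_standard_tier():
    service = CustomerService(StubLoyaltyClient({}))
    assert service.get_profile("c-2")["tier"] == "standard"
```

Because the stub returns canned answers, a failing test points at the service itself rather than at a collaborator or the network.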

End-to-End

End-to-end tests are tests run against your entire system. These tests cover a lot of production code. So when they pass, you feel good: you have a high degree of confidence that the code being tested will work in production.

Sam explains the idea of the testing pyramid: “As you go up the pyramid, the test scope increases, as does our confidence that the functionality being tested works. On the other hand, the feedback cycle time increases as the tests take longer to run, and when a test fails it can be harder to determine which functionality has broken.”

But how do we implement end-to-end tests? We need to deploy multiple services together and then run the tests against all of them. Usually there is a separate application (an extra microservice) that contains the e2e tests. To these tests, the system is a black box. I would suggest writing BDD scenarios and using an appropriate framework (e.g. Cucumber, JBehave).

In that case, the business provides the BDD scenarios, and the QA team writes the BDD tests and runs them against the black box. Developers can then change the internal structure of the system in the future without affecting these tests.
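To illustrate how a BDD scenario maps onto test code, here is a toy sketch in Python of the Given/When/Then mechanism. It is not Cucumber or JBehave; the `step` decorator, `run_scenario`, and `FakeShop` are all names I made up for the example, and `FakeShop` is an in-memory stand-in for the deployed system, which a real suite would reach over its public API.

```python
import re

STEPS = []  # (compiled pattern, step function) pairs


def step(pattern):
    """Register a function as the definition for lines matching pattern."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


class FakeShop:
    """In-memory stand-in for the whole system (assumption for this sketch)."""

    def __init__(self):
        self.stock = {}

    def place_order(self, item):
        if self.stock.get(item, 0) > 0:
            self.stock[item] -= 1
            return "accepted"
        return "rejected"


@step(r"Given the shop has (\d+) (\w+) in stock")
def given_stock(ctx, count, item):
    ctx["shop"].stock[item] = int(count)


@step(r"When the customer orders a (\w+)")
def when_order(ctx, item):
    ctx["result"] = ctx["shop"].place_order(item)


@step(r"Then the order is (\w+)")
def then_result(ctx, expected):
    assert ctx["result"] == expected, f"expected {expected}, got {ctx['result']}"


def run_scenario(text):
    """Run each non-empty scenario line through its matching step definition."""
    ctx = {"shop": FakeShop()}
    for line in filter(None, (raw.strip() for raw in text.splitlines())):
        for pattern, func in STEPS:
            match = pattern.fullmatch(line)
            if match:
                func(ctx, *match.groups())
                break
        else:
            raise LookupError(f"no step definition for: {line}")
    return ctx


SCENARIO = """
Given the shop has 1 book in stock
When the customer orders a book
Then the order is accepted
"""
```

Calling `run_scenario(SCENARIO)` executes the three steps in order. The scenario text only talks about observable behaviour, so the step definitions are the only place that would need to change if the system's public API changed.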

However, the author warns us to be careful with the number of end-to-end tests: “Show me a codebase where every new story results in a new end-to-end test, and I’ll show you a bloated test suite that has poor feedback cycles and huge overlaps in test coverage.”

Useful links

- Building Microservices by Sam Newman

- http://stackoverflow.com/a/7876055
